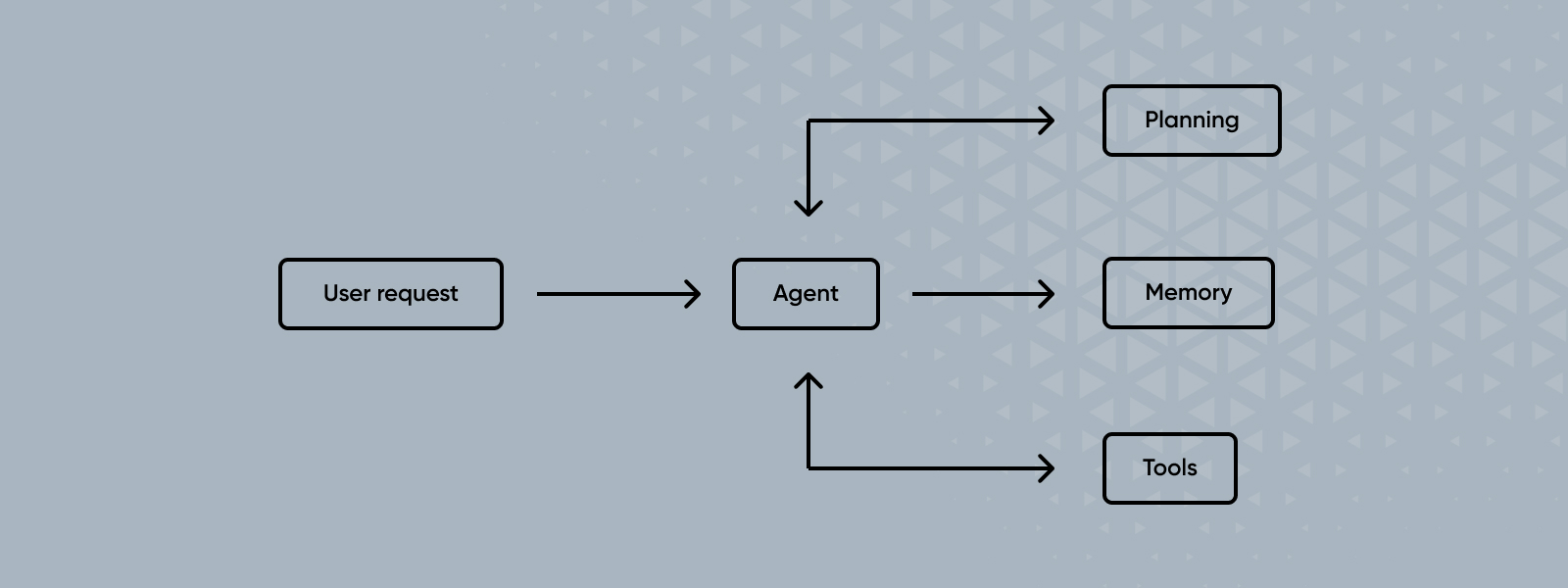

Welcome to the second article of our Agentic AI Series, in which we discuss the inner workings of AI agents. The previous article covered why agents are needed; this one explores the components of agents as well as some key properties that make them effective.

Components of Agentic Systems

As discussed in the previous article, AI agents turn iterative human-AI interactions into autonomous systems. Several key components work together to form such a system: Instruction, LLM, Reflection, Memory, and Tools.

Let’s take a look at these components one by one:

Instruction

Agents rely on instructions for their operation. These instructions serve as a blueprint, outlining the expected results and procedures, and helping the agent understand its tasks and goals.
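To make this concrete, here is a minimal sketch of an instruction captured as structured data and rendered into a prompt. The `Instruction` class and its `goal`, `steps`, and `expected_output` fields are hypothetical names chosen for illustration; frameworks differ in how they structure instructions.

```python
from dataclasses import dataclass, field


@dataclass
class Instruction:
    """Hypothetical container for an agent's blueprint: goal, procedure, expected result."""
    goal: str
    steps: list[str] = field(default_factory=list)
    expected_output: str = ""

    def to_prompt(self) -> str:
        # Render the instruction as plain text that can be sent to an LLM.
        lines = [f"Goal: {self.goal}"]
        if self.steps:
            lines.append("Procedure:")
            lines += [f"{i}. {step}" for i, step in enumerate(self.steps, start=1)]
        if self.expected_output:
            lines.append(f"Expected output: {self.expected_output}")
        return "\n".join(lines)


instruction = Instruction(
    goal="Summarise the attached quarterly report",
    steps=["Read the report", "Extract key figures", "Write a 5-bullet summary"],
    expected_output="Five bullet points, each under 20 words",
)
print(instruction.to_prompt())
```

Keeping the goal, procedure, and expected result as separate fields makes the same template easy to reuse across tasks, though a plain prompt string works just as well.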

LLM

The LLM is the core reasoning engine. It processes information, generates responses, and carries out tasks based on the instructions provided. It is essentially the “brain” of an agent.

Reflection

Reflection is the component that largely removes the need for a human to prompt the model iteratively. When the LLM responds, the agent asks it to reflect on its response, identifying any issues or places where it did not follow the instructions. This reflection process can significantly improve results, even though the same LLM is being prompted each time. Here’s an example of how a coding agent might utilise reflection for a given task:

- Start by asking for the code for the task: “Please write code for {task}.”

- After receiving the resulting code, prompt the LLM to review the code for any issues: “Read the following code for {task}, review it for correctness, style, and efficiency, and indicate if there are any errors present: {<code>}.”

- Based on the feedback provided, ask the LLM to make the necessary changes: “Make the following changes in the given code. Changes: {<suggested changes>}. Code: {<code>}.”

- Repeat this process — review and revise — until no further changes are suggested.
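The write, review, revise loop above can be sketched in a few lines of Python. The sketch assumes `llm` is any function that takes a prompt string and returns the model's reply, and that the reviewer answers “NO CHANGES” when it is satisfied; both are illustrative conventions, not a specific library's API. The scripted stub lets the example run without a real model, and `max_rounds` caps the loop in case the reviewer never settles.

```python
from typing import Callable

LLM = Callable[[str], str]  # any function mapping a prompt to a completion


def reflect_and_revise(llm: LLM, task: str, max_rounds: int = 3) -> str:
    """Write code for a task, then alternate review and revision until the
    reviewer reports no changes or max_rounds is reached."""
    code = llm(f"Please write code for {task}.")
    for _ in range(max_rounds):
        feedback = llm(
            f"Read the following code for {task}, review it for correctness, "
            f"style, and efficiency, and indicate if there are any errors present. "
            f"Reply NO CHANGES if none are needed: {code}"
        )
        # Assumed convention: the reviewer says "NO CHANGES" when it is satisfied.
        if "NO CHANGES" in feedback.upper():
            break
        code = llm(f"Make the following changes in the given code. Changes: {feedback}. Code: {code}")
    return code


def make_scripted_llm(replies: list[str]) -> LLM:
    # Hypothetical stand-in for a real model: returns canned replies in order.
    it = iter(replies)
    return lambda prompt: next(it)


fake_llm = make_scripted_llm([
    "def add(a, b): return a - b",                  # first draft (buggy)
    "Bug: uses subtraction instead of addition.",  # review
    "def add(a, b): return a + b",                  # revised draft
    "NO CHANGES",                                   # second review
])
print(reflect_and_revise(fake_llm, "adding two numbers"))
# prints: def add(a, b): return a + b
```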

Memory

Since agents involve multiple iterations of prompting and refining outputs, they need memory to keep track of past results. Two main types of memory are used:

- Short-Term Memory: Temporary storage that holds information relevant to the current task, allowing the agent (or multiple cooperating agents) to remember and learn from intermediate results.

- Long-Term Memory: Persistent storage that retains information from past executions. It can also include self-evaluations of past performance, enabling the agent to continuously improve over time.
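A minimal sketch of the two memory types, assuming short-term memory is simply an in-process list that is cleared when a task ends, and long-term memory is a JSON file on disk. Both class names and the file format are hypothetical; real systems often use a database or vector store for long-term memory.

```python
import json
import tempfile
from pathlib import Path


class ShortTermMemory:
    """Scratchpad for intermediate results of the current task only."""

    def __init__(self) -> None:
        self.items: list[str] = []

    def add(self, item: str) -> None:
        self.items.append(item)

    def clear(self) -> None:
        # Called when the task ends; nothing here survives to the next task.
        self.items.clear()


class LongTermMemory:
    """Persists records, including self-evaluations, across runs in a JSON file."""

    def __init__(self, path: Path) -> None:
        self.path = path

    def load(self) -> list[dict]:
        if not self.path.exists():
            return []
        return json.loads(self.path.read_text())

    def save(self, record: dict) -> None:
        records = self.load()
        records.append(record)
        self.path.write_text(json.dumps(records, indent=2))


with tempfile.TemporaryDirectory() as tmp:
    scratch = ShortTermMemory()
    scratch.add("draft 1 failed test_add")
    scratch.add("draft 2 passed all tests")

    archive = LongTermMemory(Path(tmp) / "memory.json")
    # When the task ends, keep a summary plus a self-evaluation, then drop the scratchpad.
    archive.save({"task": "write add()", "outcome": scratch.items[-1],
                  "self_evaluation": "Write the tests before the first draft."})
    scratch.clear()

    # A later run (a fresh object reading the same file) still sees the record.
    later = LongTermMemory(Path(tmp) / "memory.json")
    print(len(scratch.items), later.load()[0]["task"])  # prints: 0 write add()
```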

Tools

Tools are what truly empower AI agents. They extend agentic systems well beyond simple LLMs by allowing them to interact with the external world and perform actions beyond the model’s inherent capabilities. These tools, also referred to as skills, capabilities, or other terms in various frameworks and literature, can include APIs, code executors, data analysis tools, and web search. For example:

- Weather API: Can be used by a travel planner agent to fetch current weather information for better planning decisions.

- Python Code Executor: Can be utilised for tasks involving data analysis or complex computations.

- Wolfram Alpha: Can be used for mathematical queries.
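Below is a minimal sketch of how tools might be wired up: plain Python functions are registered by name with a short description (which could be shown to the LLM), and a dispatcher runs whichever tool the model asks for. `get_weather` is a hypothetical stub that returns a fixed string rather than calling a real weather API, and `calculate` is a deliberately restricted arithmetic evaluator built on Python's `ast` module, standing in for a full code executor, since running arbitrary generated code needs proper sandboxing.

```python
import ast
import operator
from typing import Callable

# Registry: tool name -> (function, description that could be shown to the LLM).
TOOLS: dict[str, tuple[Callable[..., str], str]] = {}


def tool(description: str):
    """Decorator that registers a function as a tool the agent may call."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[fn.__name__] = (fn, description)
        return fn
    return register


@tool("Current weather for a city (hypothetical stub; no real API is called).")
def get_weather(city: str) -> str:
    return f"Sunny, 24°C in {city}"  # canned value for illustration


# Arithmetic operators the calculator tool is allowed to evaluate.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
        ast.Div: operator.truediv, ast.Pow: operator.pow}


@tool("Evaluate an arithmetic expression such as '2 * (3 + 4)'.")
def calculate(expression: str) -> str:
    def evaluate(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -evaluate(node.operand)
        raise ValueError("unsupported expression")  # names, calls, etc. are rejected
    return str(evaluate(ast.parse(expression, mode="eval").body))


def call_tool(name: str, **kwargs: str) -> str:
    """Dispatch a tool call, e.g. one parsed from the LLM's structured output."""
    if name not in TOOLS:
        return f"Error: unknown tool '{name}'"
    fn, _description = TOOLS[name]
    return fn(**kwargs)


print(call_tool("calculate", expression="2 * (3 + 4)"))  # prints: 14
print(call_tool("get_weather", city="Lisbon"))          # prints: Sunny, 24°C in Lisbon
```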

Properties That Make A Great AI Agent

To be effective, an AI agent should possess certain properties. These attributes should be kept in mind while designing agentic systems to ensure optimal performance.

Here are the seven key properties explained in detail:

1. Role Playing

Agents perform best when they have a clear and well-defined role. The more specific the role, the better the agent can understand and fulfil expectations. For instance, assigning an agent the role of a “FINRA (Financial Industry Regulatory Authority) approved financial analyst” is much more effective than a generic “data analyst”. To enhance the role, include a backstory or context along with the description.
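As an illustration, the role, goal, and backstory can be assembled into the system message that opens every conversation with the agent. The `Role` class and its fields below are hypothetical; the point is only how much more guidance the specific role gives the model compared with the generic one.

```python
from dataclasses import dataclass


@dataclass
class Role:
    """Hypothetical role definition used to build an agent's system message."""
    title: str
    goal: str
    backstory: str

    def system_message(self) -> str:
        return f"You are a {self.title}. Your goal: {self.goal}. Background: {self.backstory}"


generic = Role("data analyst", "analyse the data", "You work with data.")

specific = Role(
    title="FINRA (Financial Industry Regulatory Authority) approved financial analyst",
    goal="assess whether a client's portfolio matches their stated risk tolerance",
    backstory=("You have ten years of experience reviewing retail brokerage accounts "
               "and always cite the figures your conclusions rest on."),
)

print(specific.system_message())
```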

2. Focus

Information overload can be detrimental to LLMs. Too much context or irrelevant details can lead to hallucinations, as the model might struggle to focus on the relevant information. It’s crucial to provide focused instructions, context, tools, and guardrails to keep the agent on track. It is also best to divide tasks into smaller subtasks and assign them to specialized agents, rather than relying on a single generalized agent with a broad role.
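One simple way to keep an agent focused is to pass it only the context snippets relevant to its current subtask. The sketch below ranks snippets by keyword overlap with the subtask, a deliberately naive stand-in (assumed here for illustration) for the embedding-based retrieval a production system would more likely use; the stop-word list and `top_k` value are arbitrary.

```python
import re

# Tiny illustrative stop-word list so common words don't count as overlap.
STOP_WORDS = {"a", "an", "the", "in", "for", "to", "is", "at", "of", "and"}


def keywords(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOP_WORDS


def focused_context(subtask: str, snippets: list[str], top_k: int = 2) -> list[str]:
    """Return at most top_k snippets that share keywords with the subtask,
    most overlapping first; snippets with no overlap are dropped."""
    query = keywords(subtask)
    scored = [(len(query & keywords(s)), s) for s in snippets]
    relevant = sorted((pair for pair in scored if pair[0] > 0),
                      key=lambda pair: pair[0], reverse=True)
    return [s for _, s in relevant[:top_k]]


notes = [
    "The hotel check-in time is 3 pm.",
    "Flights to Rome depart daily at 9 am.",
    "The company picnic is next Friday.",
    "Rome hotels near the Colosseum are cheaper in March.",
]
print(focused_context("Book a hotel in Rome for March", notes))
```

Here the company-picnic note is never sent to the model at all, so it cannot distract it.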

3. Tools

It is important to provide an agent with the tools needed for its assigned task, but only the relevant ones. Supplying every available tool can lead the agent to pick an unsuitable or irrelevant tool, resulting in inefficiency and possibly hallucinations.
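A minimal sketch of scoping tools per agent: each agent receives an explicit allowlist, only those tools would be described in its prompt, and calls outside the list are refused. The tool functions are hypothetical stubs, and `ToolScopedAgent` is an illustrative name rather than any framework's class.

```python
from typing import Callable


def search_flights(route: str) -> str:
    return f"3 flights found for {route}"  # hypothetical stub


def get_weather(city: str) -> str:
    return f"Sunny in {city}"  # hypothetical stub


def run_sql(query: str) -> str:
    return "42 rows"  # hypothetical stub


ALL_TOOLS: dict[str, Callable[[str], str]] = {
    "search_flights": search_flights,
    "get_weather": get_weather,
    "run_sql": run_sql,
}


class ToolScopedAgent:
    """An agent that can only see and call the tools it was given."""

    def __init__(self, name: str, allowed: set[str]) -> None:
        unknown = allowed - set(ALL_TOOLS)
        if unknown:
            raise ValueError(f"unknown tools: {unknown}")
        self.name = name
        self.tools = {t: ALL_TOOLS[t] for t in allowed}

    def tool_menu(self) -> list[str]:
        # Only these names would be described to the LLM in its prompt.
        return sorted(self.tools)

    def use(self, tool_name: str, arg: str) -> str:
        if tool_name not in self.tools:
            return f"Refused: {self.name} has no access to '{tool_name}'"
        return self.tools[tool_name](arg)


travel_agent = ToolScopedAgent("travel_planner", {"search_flights", "get_weather"})
print(travel_agent.tool_menu())                 # ['get_weather', 'search_flights']
print(travel_agent.use("run_sql", "SELECT 1"))  # refused: irrelevant to travel planning
```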

4. Cooperation

Being able to interact with other agents, for example by passing on or delegating tasks, drawing on the expertise of others, or incorporating feedback, can boost an agent’s performance significantly. This approach, known as a Multi-Agent System, allows agents to operate autonomously without human intervention, as they exchange ideas and solve problems collaboratively.

A fascinating example of a multi-agent collaboration system is proposed in the paper titled “ChatDev: Communicative Agents for Software Development” (https://arxiv.org/abs/2307.07924). The paper introduces a completely autonomous software engineering system, as illustrated below, with agent teams for different phases of the software development lifecycle, such as ‘designing,’ ‘coding,’ and ‘testing.’ Additionally, an agent acts as a CEO to manage all teams and keep processes streamlined.

5. Guardrails

Guardrails are needed because AI systems can produce outputs that vary in format and accuracy, and that sometimes even contain inappropriate content. They act as safety measures, ensuring the agent’s outputs meet specific quality standards and adhere to ethical guidelines. Guardrails can also prevent the agent from getting stuck in loops by limiting the number of iterations, and can control which tools it can use and which agents it can collaborate with.
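Here is a minimal sketch of two guardrails working together: each output is checked against an expected format and a simple content filter, and the agent is re-prompted with the rejection reason at most `max_attempts` times before giving up. The required keys, banned terms, and scripted stub model are assumptions made for illustration.

```python
import json
from typing import Callable

REQUIRED_KEYS = {"destination", "days"}  # assumed schema for a travel-plan answer
BANNED_TERMS = {"password", "ssn"}       # illustrative content filter


def check_output(raw: str) -> tuple[bool, str]:
    """Return (ok, reason) for one candidate output."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False, "output is not valid JSON"
    if not isinstance(data, dict) or not REQUIRED_KEYS.issubset(data):
        return False, f"output must be a JSON object with keys {sorted(REQUIRED_KEYS)}"
    if any(term in raw.lower() for term in BANNED_TERMS):
        return False, "output contains disallowed content"
    return True, "ok"


def guarded_run(llm: Callable[[str], str], prompt: str, max_attempts: int = 3) -> dict:
    """Validate each answer and re-prompt with the rejection reason.
    The attempt cap keeps the agent from looping forever."""
    for _ in range(max_attempts):
        raw = llm(prompt)
        ok, reason = check_output(raw)
        if ok:
            return json.loads(raw)
        prompt = f"{prompt}\nYour previous answer was rejected: {reason}. Try again."
    raise RuntimeError(f"no valid output after {max_attempts} attempts")


# Scripted stand-in for a model: the first answer is chatty prose, the second is valid JSON.
replies = iter(["Sure! Here is your plan...", '{"destination": "Rome", "days": 4}'])
print(guarded_run(lambda prompt: next(replies), "Plan a trip and answer in JSON."))
# prints: {'destination': 'Rome', 'days': 4}
```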

6. Memory

A key aspect of intelligence is the ability to learn and improve, which depends on memory. By remembering past experiences, such as inputs, actions, and results, the agent can make better decisions in future tasks. This ongoing learning process is essential to developing truly intelligent AI agents.
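A minimal sketch of learning from experience: each finished task is recorded along with a lesson, and before a new task the agent recalls lessons from sufficiently similar past tasks so they can be added to its prompt. Similarity here is `difflib.SequenceMatcher` string similarity with an arbitrary 0.5 threshold, a crude assumption in place of the embedding search real systems typically use; `Experience` and `ExperienceLog` are hypothetical names.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass
class Experience:
    task: str
    actions: list[str]
    result: str
    lesson: str


class ExperienceLog:
    """Stores past experiences and recalls lessons from similar tasks."""

    def __init__(self, threshold: float = 0.5) -> None:
        self.entries: list[Experience] = []
        self.threshold = threshold  # minimum similarity to count as "similar"

    def record(self, exp: Experience) -> None:
        self.entries.append(exp)

    def recall(self, new_task: str) -> list[str]:
        def similarity(e: Experience) -> float:
            return SequenceMatcher(None, e.task.lower(), new_task.lower()).ratio()
        similar = [e for e in self.entries if similarity(e) >= self.threshold]
        similar.sort(key=similarity, reverse=True)  # most similar lessons first
        return [e.lesson for e in similar]


log = ExperienceLog()
log.record(Experience("plan a trip to Rome", ["search_flights"], "over budget",
                      "Check hotel prices before booking flights."))
log.record(Experience("summarise a sales report", ["read_file"], "too long",
                      "Keep summaries under 100 words."))
print(log.recall("plan a trip to Paris"))
```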

7. Planning

Planning involves breaking a process down into small steps. Encouraging an LLM to think through and plan its approach before it starts answering or solving the problem at hand tends to lead to better results. Here’s an example of how an agent may plan a task, as described in the paper titled “HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face” (https://arxiv.org/abs/2303.17580):

Task: Given an image of a boy, generate an image of a girl in the same pose, reading a book, and create a voice snippet to describe the new image.

Plan:

- Pose Determination: Determine the boy’s pose in the initial image.

- Pose-to-image: Generate an image of a girl in the same pose, reading a book.

- Image-to-text: Describe the new image using text.

- Text-to-speech: Convert the text description to a voice snippet.
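Each step in this plan consumes the output of the one before it, so the plan can be treated as a small dependency graph. The sketch below, a simplified representation assumed for illustration rather than HuggingGPT's exact format, stores each step with the ids of the steps it depends on and runs them in dependency order using Python's `graphlib.TopologicalSorter` (Python 3.9+). The model functions are hypothetical stubs standing in for the specialised models a controller LLM would select.

```python
from graphlib import TopologicalSorter

# Each step: (task name, ids of steps whose outputs it needs).
PLAN = {
    1: ("pose-determination", []),
    2: ("pose-to-image", [1]),
    3: ("image-to-text", [2]),
    4: ("text-to-speech", [3]),
}

# Hypothetical stand-ins for the specialised models a controller LLM would pick.
MODELS = {
    "pose-determination": lambda inputs: "pose(boy.jpg)",
    "pose-to-image": lambda inputs: f"girl_reading.png from {inputs[0]}",
    "image-to-text": lambda inputs: f"caption of {inputs[0]}",
    "text-to-speech": lambda inputs: f"audio of [{inputs[0]}]",
}


def execute(plan: dict[int, tuple[str, list[int]]]) -> dict[int, str]:
    """Run each step once all of its dependencies have produced output."""
    graph = {step_id: set(deps) for step_id, (_, deps) in plan.items()}
    outputs: dict[int, str] = {}
    for step_id in TopologicalSorter(graph).static_order():
        task, deps = plan[step_id]
        outputs[step_id] = MODELS[task]([outputs[d] for d in deps])
    return outputs


print(execute(PLAN)[4])
# prints: audio of [caption of girl_reading.png from pose(boy.jpg)]
```

Because steps run in topological order, the same function also handles plans with branches, such as a step that needs both an image and a caption; a plan containing a cycle raises `graphlib.CycleError`.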

Creating effective AI agents requires careful consideration and implementation of the key properties discussed above. Interestingly, these qualities are also what you might look for in an ideal job candidate.

In our next article in the Agentic AI Series, we’ll dive into the practical implementation of AI agents using a framework called CrewAI.